Note: This blog post is a transcript of my talk at the Archaeological Park of Paestum for Paestum Tech Days. The conversational tone and simplified explanations reflect the original presentation style, aimed at making computer graphics concepts accessible to everyone, regardless of their technical background.

Okay, let's start with a nice question: How do we see? With the eyes. The eyes are a marvel of nature. They are light sensors. They capture light and send a signal to the brain, which we interpret as our internal representation of the reality around us. Wonderful.

What is light? This second question is a bit more difficult. A didactic definition would be: a collection of photons. It is electromagnetic radiation — a variation of the electric and magnetic fields in space and time. It is a flow of particles and waves. The particles are photons. It has a dual nature: it has both a particle nature and a wave nature. Let's say it bounces like a ball, but it waves like the sea. It is energy that travels, so much so that we can capture it with solar panels to power our homes.

Yes, it's difficult. In fact, you needed to be Einstein to have the genius idea of describing light as a flow of photons, which are packets of energy. For example, a 60-watt light bulb emits roughly 10^20 photons per second, that is, a hundred billion billion. And that's a lot. But what is the main source of photons that reaches planet Earth? The sun. How many photons does the sun emit per second? Many. It's such a large number that it's unimaginable.
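The order of magnitude can be checked by dividing the bulb's power by the energy of a single photon, E = hc/λ. This is a back-of-the-envelope sketch in Python. It assumes, unrealistically, that all 60 W leave the bulb as photons of a single wavelength, 555 nm (green). A real incandescent bulb emits mostly infrared photons, which carry less energy each, so the true count is of the same order or somewhat higher.

```python
# Back-of-the-envelope photon count: power divided by the energy of one photon.
# Assumption: all the power is emitted at a single wavelength (555 nm, green).
PLANCK = 6.62607015e-34      # Planck constant, J*s
LIGHT_SPEED = 299_792_458.0  # speed of light, m/s

def photons_per_second(power_watts: float, wavelength_m: float) -> float:
    """Number of photons per second carried by light of the given power."""
    photon_energy = PLANCK * LIGHT_SPEED / wavelength_m  # E = h*c/lambda, in joules
    return power_watts / photon_energy

rate = photons_per_second(60.0, 555e-9)
print(f"{rate:.2e} photons per second")  # prints 1.68e+20 photons per second
```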

How Does Light Work?

Okay, but if we want to simulate light with a computer, we need to understand it a bit better. It behaves like both particles and waves, so we can borrow concepts from particle and wave physics, but we should also look at some phenomena that are typical of light.

For example, the phenomenon of transmission. Light passes through a medium, for instance, from space through the Earth's atmosphere. It arrives with a certain direction, intensity, and frequency, and, in the ideal case, continues in the same direction, with the same intensity and frequency.

There's the phenomenon of reflection, which happens with a mirror; a ray is reflected by the mirror and changes direction.
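In a renderer, the mirror direction is usually computed with a small vector formula, r = d - 2(d·n)n, where d is the incoming direction and n is the unit surface normal. A minimal Python sketch, with vectors as plain tuples:

```python
Vec = tuple[float, float, float]

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def reflect(d: Vec, n: Vec) -> Vec:
    """Mirror the direction d about the unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * dot(d, n)
    return (d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2])

# A ray going down and to the right hits a floor whose normal points up.
print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))  # (1.0, 1.0, 0.0)
```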

The phenomenon of refraction is typical of water: when a ray passes from air into water, it changes direction, making a straw in a glass look broken even though it isn't. It's just the optical effect of refraction bending the light.
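Refraction follows Snell's law, n1 sin θ1 = n2 sin θ2, where n1 and n2 are the refractive indices of the two media (about 1.0 for air and 1.33 for water). A minimal Python sketch of the usual vector form, assuming unit-length vectors and a normal that points back towards the incoming ray:

```python
import math
from typing import Optional

Vec = tuple[float, float, float]

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def refract(d: Vec, n: Vec, n1: float, n2: float) -> Optional[Vec]:
    """Direction of a unit ray d after crossing a surface with unit normal n.

    n must point back towards the incoming ray (dot(d, n) < 0); n1 and n2 are the
    refractive indices before and after the surface. Returns None on total
    internal reflection.
    """
    eta = n1 / n2
    cos_i = -dot(d, n)
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)  # cos^2 of the refracted angle
    if k < 0.0:
        return None  # no refracted ray: the light is entirely reflected
    a = eta * cos_i - math.sqrt(k)
    return (eta * d[0] + a * n[0], eta * d[1] + a * n[1], eta * d[2] + a * n[2])

s = math.sqrt(0.5)  # sin(45 degrees) = cos(45 degrees)
t = refract((s, -s, 0.0), (0.0, 1.0, 0.0), 1.0, 1.33)  # from air into water at 45 degrees
print(f"{math.degrees(math.asin(t[0])):.1f} degrees from the normal")  # 32.1 degrees from the normal
```

When the ray goes from the denser medium into the lighter one at a steep enough angle, there is no solution and the function returns None: that is total internal reflection.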

The phenomenon of dispersion. Here we have a ray that splits into many other rays of different frequencies. A clear example is a prism, which is also a beautiful album by a musical group. White light enters the left side of the prism, and each frequency is bent by a slightly different amount, so the light exits on the right side split into rays of different frequencies, and different frequencies correspond to different colors. We perceive a different color when the frequency changes. Our eyes capture only a certain range of frequencies of electromagnetic radiation; outside this range, there are frequencies we cannot see, such as infrared and ultraviolet.
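A prism separates colors because the refractive index of glass depends slightly on the wavelength. A small sketch using Cauchy's empirical formula n(λ) = A + B/λ², with approximate coefficients often quoted for BK7 crown glass (the values are approximate, and the choice of glass is an assumption):

```python
import math

# Cauchy's empirical formula: n(lambda) = A + B / lambda^2, with lambda in micrometres.
# A and B are approximate values often quoted for BK7 crown glass.
CAUCHY_A = 1.5046
CAUCHY_B = 0.00420  # square micrometres

def refractive_index(wavelength_um: float) -> float:
    return CAUCHY_A + CAUCHY_B / wavelength_um ** 2

def refraction_angle_deg(incidence_deg: float, wavelength_um: float) -> float:
    """Snell's law from air (n = 1) into the glass: sin(out) = sin(in) / n."""
    s = math.sin(math.radians(incidence_deg)) / refractive_index(wavelength_um)
    return math.degrees(math.asin(s))

for color, wavelength in (("blue", 0.45), ("red", 0.65)):
    n = refractive_index(wavelength)
    angle = refraction_angle_deg(45.0, wavelength)
    print(f"{color:>4}: n = {n:.4f}, refracted at {angle:.2f} degrees from the normal")
```

Blue light ends up with the smaller angle from the normal, so it is bent more than red; across the two faces of a prism, that small difference fans the colors out.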

The phenomenon of absorption. Light is completely captured by an object and seems to disappear. But it cannot really disappear, because we know from the principle of conservation of energy that "Nothing is created, nothing is destroyed, but everything is transformed". So this ray becomes something else, typically heat.

The phenomenon of scattering happens in clouds. The ray splits into many other rays and goes everywhere. It also happens in fog. I don't know if you've ever seen streetlights in the fog; you see this halo.

In reality, all these light phenomena do not occur distinctly and separately but all happen together, everywhere, simultaneously. For example, there are materials that can reflect more, absorb more, or transmit/refract light more. But the other phenomena still occur, even if only to a small extent.
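One way to picture this in code is to give each material the fractions of light it reflects, transmits, and absorbs, which must add up to 1, and then pick one event per photon at random in proportion to those fractions; many Monte Carlo renderers make a similar random choice at each surface. The material names and numbers below are made up for illustration.

```python
import random

# Illustrative fractions of incoming light that each surface reflects, transmits
# and absorbs (made-up round numbers). Conservation of energy: they sum to 1.
MATERIALS = {
    "mirror": {"reflect": 0.95, "transmit": 0.00, "absorb": 0.05},
    "glass": {"reflect": 0.04, "transmit": 0.92, "absorb": 0.04},
    "black paint": {"reflect": 0.03, "transmit": 0.00, "absorb": 0.97},
}

def choose_event(material: dict[str, float], rng: random.Random) -> str:
    """Decide what happens to one photon, with probability equal to each fraction."""
    u = rng.random()
    cumulative = 0.0
    for event, fraction in material.items():
        cumulative += fraction
        if u < cumulative:
            return event
    return event  # only reached through floating-point round-off: use the last event

for name, material in MATERIALS.items():
    assert abs(sum(material.values()) - 1.0) < 1e-9, name

rng = random.Random(0)
counts = {"reflect": 0, "transmit": 0, "absorb": 0}
for _ in range(10_000):
    counts[choose_event(MATERIALS["glass"], rng)] += 1
print(counts)  # mostly "transmit", with a few reflections and absorptions
```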

Light Simulation

Finally, we get hands-on. Let's delve into the technical issue of real-time light simulation.

What does real-time mean? I looked it up in the dictionary because I didn't know either. It means with a duration corresponding to the real one, so very quickly, almost immediately. Well, at least quickly enough to give us the optical illusion of continuity. Our eye perceives something as continuous if I update it about every 16 milliseconds: a new image every 16 milliseconds, and we perceive it as continuous. To be precise, 1000 milliseconds divided by 60 images gives about 16.7 milliseconds per image, which means 60 images per second.
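The arithmetic behind that budget is simple, and it also shows how little time is left for each pixel. A small sketch, assuming a 1920x1080 screen whose pixels are processed one after another (real graphics hardware works on many pixels in parallel, so this is only a worst-case picture):

```python
# Time budget per frame, and per pixel, at a given frame rate.
# Assumption: a 1920x1080 screen, pixels handled one after another.
FPS = 60
WIDTH, HEIGHT = 1920, 1080

frame_budget_ms = 1000.0 / FPS
pixel_budget_ns = frame_budget_ms * 1e6 / (WIDTH * HEIGHT)  # 1 ms = 1e6 ns

print(f"{frame_budget_ms:.1f} ms per frame")  # 16.7 ms per frame
print(f"{pixel_budget_ns:.1f} ns per pixel")  # 8.0 ns per pixel
```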

Okay, so 16 milliseconds, but we have to do a lot of calculations here. I talked about billions and billions of photons. I can't simulate all these photons because I only have 16 milliseconds. So, are we interested in all the photons? No, maybe I can focus on a reduced number of photons. Okay, and which ones interest me? Those that hit the eye. These are the ones that contribute to the image. These are the ones that actually interest me.

In computer graphics, we use the term viewpoint or camera, as in a photo or video camera, so I will use camera and eye interchangeably. How can photons reach the eye? They can arrive directly: they start from a light source, either a point or an object with a certain size, like an LED panel, and reach our eye. Or they can bounce off objects before reaching our eye; in this case, it is called indirect light. And they can bounce again and again, an indefinite number of times.

So how do I know which photons reach my eye? This is an important question because there are photons that do not reach the eye that I want to ignore. So, it's time to talk about practical methodologies. In computer science, we call these algorithms, which in the world of cooking are called recipes.

Whitted-style Raytracing

Step number one: what do I see? I know where I am, I want to understand what is in front of me. I trace a ray, what do I hit? Maybe I hit something. Yes, I found something. Okay, let's trace more rays. Maybe I continue along this path. I trace a ray in the reflected direction and then trace other rays to reach the lights in my scene. How many lights are there? I trace as many rays as there are lights.

Okay, so I trace a reverse path. This way, I make sure to trace paths that reach the camera, so I focus only on those photons, right? Clearly, it doesn't end here, because light bounces, so the reflected ray bounces on a new object. For the new object, I can trace other rays: a reflected ray and other rays to find the light recursively, repetitively.
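The recursion just described can be sketched in a few dozen lines of Python. This is a simplified sketch, not Whitted's original program: the scene contains only spheres and point lights, colors are single brightness values, refraction is left out, and the class and parameter names (`WhittedTracer`, `max_depth`, and so on) are hypothetical. It also counts every ray it traces.

```python
import math
from dataclasses import dataclass
from typing import Optional

Vec = tuple[float, float, float]

def add(a: Vec, b: Vec) -> Vec: return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a: Vec, b: Vec) -> Vec: return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a: Vec, s: float) -> Vec: return (a[0] * s, a[1] * s, a[2] * s)
def dot(a: Vec, b: Vec) -> float: return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a: Vec) -> Vec: return scale(a, 1.0 / math.sqrt(dot(a, a)))

@dataclass
class Sphere:
    center: Vec
    radius: float
    diffuse: float       # share of light scattered from the lights (grayscale)
    reflectivity: float  # 0 = matte, 1 = perfect mirror

def hit_distance(origin: Vec, direction: Vec, s: Sphere) -> Optional[float]:
    """Nearest positive t with |origin + t*direction - center| = radius (unit direction)."""
    oc = sub(origin, s.center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - s.radius * s.radius)
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-4:  # small epsilon so a ray does not re-hit the surface it starts on
            return t
    return None

class WhittedTracer:
    def __init__(self, spheres: list[Sphere], lights: list[tuple[Vec, float]], max_depth: int = 3):
        self.spheres = spheres
        self.lights = lights  # point lights: (position, intensity)
        self.max_depth = max_depth
        self.rays_traced = 0

    def closest(self, origin: Vec, direction: Vec) -> Optional[tuple[float, Sphere]]:
        self.rays_traced += 1  # every call traces one ray
        best = None
        for s in self.spheres:
            t = hit_distance(origin, direction, s)
            if t is not None and (best is None or t < best[0]):
                best = (t, s)
        return best

    def trace(self, origin: Vec, direction: Vec, depth: int = 0) -> float:
        """Brightness along a ray: shadow rays to every light, plus a recursive reflected ray."""
        hit = self.closest(origin, direction)
        if hit is None:
            return 0.0  # the ray leaves the scene: black background
        t, s = hit
        p = add(origin, scale(direction, t))
        n = normalize(sub(p, s.center))
        color = 0.0
        for light_pos, intensity in self.lights:  # one shadow ray per light
            to_light = sub(light_pos, p)
            dist = math.sqrt(dot(to_light, to_light))
            l = scale(to_light, 1.0 / dist)
            blocker = self.closest(p, l)
            if blocker is None or blocker[0] > dist:  # nothing between p and the light
                color += s.diffuse * intensity * max(0.0, dot(n, l))
        if s.reflectivity > 0.0 and depth < self.max_depth:  # one reflected ray, recursively
            r = sub(direction, scale(n, 2.0 * dot(direction, n)))
            color += s.reflectivity * self.trace(p, r, depth + 1)
        return color

mirror = Sphere((0.0, 0.0, -3.0), 1.0, diffuse=0.2, reflectivity=0.8)
matte = Sphere((0.0, 0.0, 3.0), 1.0, diffuse=0.9, reflectivity=0.0)  # behind the camera
for n_lights in (1, 1000):
    lights = [((float(i % 10), 5.0, 0.0), 1.0 / n_lights) for i in range(n_lights)]
    tracer = WhittedTracer([mirror, matte], lights)
    tracer.trace((0.0, 0.0, 0.0), (0.0, 0.0, -1.0))
    print(n_lights, "lights ->", tracer.rays_traced, "rays for one pixel")
```

For this pixel, the ray hits the mirror sphere and, through the reflection, the matte sphere, so the count is 2 + 2L rays for L lights: 4 rays with one light, 2002 with a thousand. That linear growth with the number of lights is exactly what makes this approach expensive in scenes with many lights.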

Great, this algorithm was created in 1980 by Turner Whitted. It's called Whitted-Style Ray Tracing. Turner Whitted was a scientist at Bell Labs. According to Americans, Bell is the inventor of the telephone, but according to us, it's Meucci. At that time, Bell Labs was part of the American Telephone and Telegraph Company.

I hope that's clear. For each bounce of light, a reflected ray and other rays towards the lights in the scene. But there's a small flaw in this algorithm. What happens if my scene has thousands and thousands of lights? My electrician went crazy and installed a lot of LEDs in my room. Can I simulate them all? I would spend a lot of time tracing rays towards all these lights, so I need to find a better solution: an algorithm that scales better with the complexity of the scene.

So, let's try a new algorithm.

Path Tracing

Let's start from scratch. What do I see? I trace a ray. This part stays the same, alright? I found something, but at this point, instead of tracing a reflected ray plus one ray per light as in the previous steps, I trace only one ray, in a random direction. I find something. Okay, I continue. I trace another ray in a random direction. Oh, I was lucky: I found a light along the way. But I was very lucky. This is a path; let's remember that.

However, there are rays that I choose randomly that might not hit anything in my scene; they might get lost in the void, they might miss the light. The ray is sad.
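The random walk can be illustrated with a deliberately simplified toy model rather than a real renderer. Assumption: instead of intersecting real geometry, each random bounce hits the light with probability `P_LIGHT`, gets lost in the void with probability `P_MISS`, and otherwise lands on another surface that passes on a fraction `ALBEDO` of the light. All names and numbers are hypothetical.

```python
import random

# Hypothetical scene statistics for a toy model (not taken from any real scene).
P_LIGHT = 0.1    # chance that a random bounce direction hits the light
P_MISS = 0.3     # chance that it escapes into the void
ALBEDO = 0.7     # fraction of light each surface passes on
EMITTED = 10.0   # brightness of the light source
MAX_BOUNCES = 8  # stop following very long paths

def trace_path(rng: random.Random) -> float:
    """Follow one random path, starting from the first surface the camera sees.

    Instead of intersecting real geometry, each bounce just draws a random
    outcome: light found, ray lost, or another surface.
    """
    throughput = ALBEDO  # the first surface already kept only part of the light
    for _ in range(MAX_BOUNCES):
        u = rng.random()
        if u < P_LIGHT:
            return throughput * EMITTED  # lucky: this path reached the light
        if u < P_LIGHT + P_MISS:
            return 0.0  # the ray got lost in the void: a black sample
        throughput *= ALBEDO  # another surface: keep bouncing in a new random direction
    return 0.0  # path abandoned after MAX_BOUNCES bounces

rng = random.Random(1)
paths = [trace_path(rng) for _ in range(1000)]
print(f"{sum(p == 0.0 for p in paths)} of {len(paths)} paths found no light")
```

With these made-up numbers, about three paths out of four find no light at all.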

I mentioned Path; in fact, this algorithm is called Path Tracing. It was created in 1986 by Jim Kajiya. At that time, he was a professor at the California Institute of Technology, and since 1994, he has been a researcher at Microsoft.

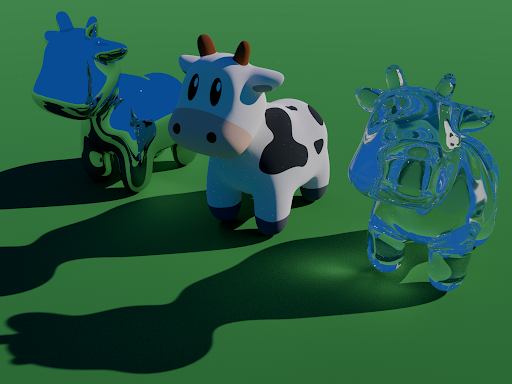

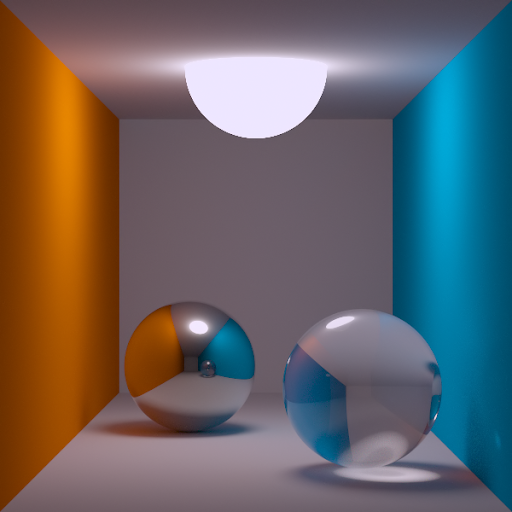

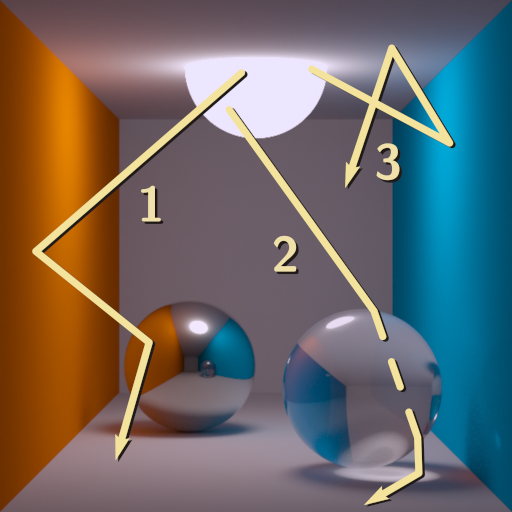

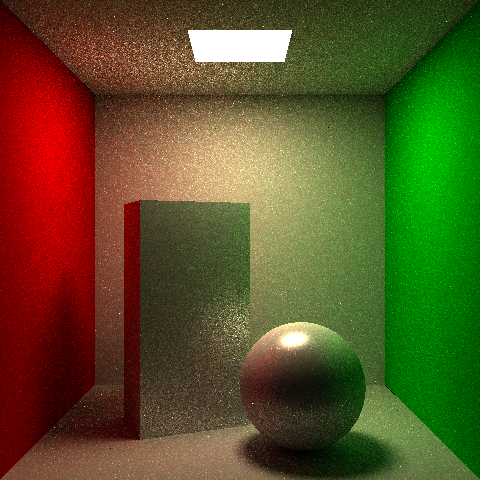

How beautiful. This is an image created with the Path Tracing algorithm. It is a scene called the Cornell Box. Cornell Boxes are a family of 3D scenes that can vary in wall color or in the objects they contain. I can vary them a bit as I like, but fundamentally they are 3D scenes created by academics at Cornell University, which is located in Ithaca, a college town in the state of New York.

These are examples of paths that light can take. Let's look at path number one. A bounce on the metal sphere and then it reaches the camera. Path number three bounces on the wall, the ceiling, and then reaches the camera. But path number two is more interesting because of the glass sphere, so we have the phenomenon of refraction. The ray is bent, passes through the sphere, and then reaches the camera.


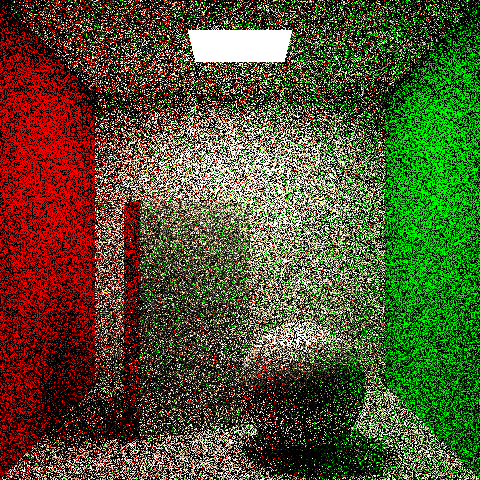

In this image, we can see how the final result improves as the number of paths I consider increases. Let's focus on image number one. You see, it's very noisy. There are many black pixels. Here, for each pixel, I made only one path, but some pixels are black. Why are they black?

I'll tell you: because, as I mentioned before, there are random paths that do not find any light along the way, so it's as if it's dark. That's why. But I can do something: maybe, for each pixel, I don't make just one path but try to make another one, and I might be luckier. This way, I increase the chances of finding the light.

In mathematics, we call these paths "samples", hence the technical term "samples per pixel". I increase the number of samples per pixel to 2, 4, 8, 16, 32, 64, 128, and I get a cleaner, more defined image, because I find the light with a higher probability.
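The effect of more samples per pixel can be checked with another toy model (the numbers are hypothetical): each random path reaches the light with probability `P_FIND`, and a pixel averages `spp` independent paths. A pixel stays black only if all of its paths miss, which happens with probability (1 - P_FIND)^spp, and the noise shrinks roughly like 1/sqrt(spp).

```python
import random
import statistics

P_FIND = 0.25     # hypothetical chance that one random path reaches the light
PATH_VALUE = 4.0  # light carried by a successful path, so the true pixel value is 1.0

def render_pixel(spp: int, rng: random.Random) -> float:
    """Average `spp` independent random paths, as a path tracer does for each pixel."""
    total = 0.0
    for _ in range(spp):
        if rng.random() < P_FIND:
            total += PATH_VALUE
    return total / spp

rng = random.Random(42)
for spp in (1, 2, 4, 8, 16, 32, 64, 128):
    pixels = [render_pixel(spp, rng) for _ in range(2000)]
    black = sum(p == 0.0 for p in pixels) / len(pixels)
    spread = statistics.pstdev(pixels)
    print(f"{spp:4d} spp: black {black:6.1%} (expected {(1 - P_FIND) ** spp:6.1%}), noise {spread:.3f}")
```

Each doubling of the samples doubles the work, but the noise only drops by a factor of about 1.4, which is why the 16-millisecond budget matters so much.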

However, I have to consider more paths, so I have to do more calculations, which means I might exceed the 16-millisecond budget. I always have to be careful not to overdo it. Maybe I can focus only on the most useful paths; there are techniques for that. Indeed, Turner Whitted had guessed something right: trying to reach the light as soon as possible reduces the number of samples needed to obtain a well-defined image.

From 1986 onwards, however, algorithms have improved, and today I can achieve better results with fewer samples than naive path tracing needs.

Maths

In short, I talked about Whitted-Style Ray Tracing, I talked about Path Tracing, which are algorithms in the Ray Tracing family. What I really mean is: Maths.

Maths. In fact, I can represent a ray with a point and a vector: the point is the origin, the vector is the direction of my ray. I represent geometries with points, plus indices that say which points to connect, like that connect-the-dots puzzle game. For transformations, I can use matrix multiplications to scale, rotate, and translate objects. I solve intersections between rays and geometries with systems of equations: in this case, the ray equation and the triangle equation.
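Here is a minimal Python sketch of those ideas: a ray as an origin point plus a direction vector, a mesh as a list of points plus index triples, and a ray-triangle intersection. For the intersection it uses the Möller-Trumbore algorithm, one well-known way to solve the system formed by the ray equation and the triangle equation; the names are illustrative, and transformations are left out.

```python
from dataclasses import dataclass
from typing import Optional

Vec = tuple[float, float, float]

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

@dataclass
class Ray:
    origin: Vec     # the point where the ray starts
    direction: Vec  # the vector it travels along

    def at(self, t: float) -> Vec:
        """Ray equation: P(t) = origin + t * direction."""
        o, d = self.origin, self.direction
        return (o[0] + t * d[0], o[1] + t * d[1], o[2] + t * d[2])

# A mesh: points, plus index triples saying which points form each triangle.
VERTICES: list[Vec] = [(-1.0, -1.0, -3.0), (1.0, -1.0, -3.0), (1.0, 1.0, -3.0), (-1.0, 1.0, -3.0)]
TRIANGLES = [(0, 1, 2), (0, 2, 3)]  # a square made of two triangles

def intersect_triangle(ray: Ray, a: Vec, b: Vec, c: Vec, eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore: solve origin + t*dir = a + u*(b - a) + v*(c - a) for t, u, v."""
    e1, e2 = sub(b, a), sub(c, a)
    p = cross(ray.direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # the ray is parallel to the triangle's plane
    s = sub(ray.origin, a)
    u = dot(s, p) / det
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(ray.direction, q) / det
    if v < 0.0 or u + v > 1.0:
        return None  # outside the triangle
    t = dot(e2, q) / det
    return t if t > eps else None  # only hits in front of the origin count

ray = Ray(origin=(0.5, 0.2, 0.0), direction=(0.0, 0.0, -1.0))
hits = [intersect_triangle(ray, *(VERTICES[i] for i in tri)) for tri in TRIANGLES]
print(hits)         # [3.0, None]
print(ray.at(3.0))  # (0.5, 0.2, -3.0)
```

Four points and two index triples describe the square, with vertices 0 and 2 shared by both triangles; the ray through (0.5, 0.2) only hits the first triangle, at distance 3.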

Light is simulated and calculated with integrals, and here it gets a bit more complicated. But speaking of integrals, let me show you my favorite formula: the rendering equation.
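In the form most graphics textbooks use today, the rendering equation reads:

$$
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o) + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_i(\mathbf{x}, \omega_i)\, (\omega_i \cdot \mathbf{n})\, d\omega_i
$$

Here $L_o(\mathbf{x}, \omega_o)$ is the light leaving the point $\mathbf{x}$ in the direction $\omega_o$ (towards the eye), and $L_e$ is the light the surface emits by itself. The integral adds up the light $L_i$ arriving from every direction $\omega_i$ of the hemisphere $\Omega$ above the surface; $f_r$, the BRDF, says how much of it the material reflects towards $\omega_o$; and the cosine factor $\omega_i \cdot \mathbf{n}$ accounts for light arriving at a grazing angle being spread over a larger area.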

How beautiful, look how beautiful it is. Okay, I'll read it to you, simply. The light that arrives in my direction is equal to the light emitted by a certain surface, maybe an LED, plus all the light that arrives on this surface and is reflected in my direction. They should teach mathematics like this in school.
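Path tracing estimates the integral in that equation with Monte Carlo sampling: pick random directions, evaluate what is inside the integral, divide by the probability of picking that direction, and average. A minimal sketch for a case whose exact answer is known, assuming a matte (Lambertian) surface with BRDF albedo/π, lit by the same radiance from every direction, with no emission of its own; the exact reflected light is then albedo × incoming.

```python
import math
import random

def estimate_reflected(albedo: float, incoming: float, samples: int, rng: random.Random) -> float:
    """Monte Carlo estimate of the integral term of the rendering equation.

    Assumptions: a Lambertian BRDF (albedo / pi) and the same incoming radiance
    from every direction. Directions are drawn uniformly over the hemisphere,
    so the pdf is 1 / (2 pi) and cos(theta) is uniformly distributed in [0, 1].
    """
    brdf = albedo / math.pi
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(samples):
        cos_theta = rng.random()  # the azimuth angle does not matter for this integrand
        total += brdf * incoming * cos_theta / pdf  # f_r * L_i * cos(theta) / pdf
    return total / samples

emitted = 0.0  # L_e: this surface is not a light source
rng = random.Random(7)
for samples in (10, 1_000, 100_000):
    outgoing = emitted + estimate_reflected(0.8, 1.0, samples, rng)
    print(f"{samples:>7} samples: {outgoing:.4f} (exact: 0.8)")
```

More samples bring the estimate closer to the exact 0.8, the same trade-off as samples per pixel.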

Raytracing Algorithm

This is roughly how a ray tracing algorithm works. Don't worry because I'll read it to you, line by line.


For y that goes from zero to the height of the screen, so I can do one row at a time, okay? 0, 1, 2, 3, 4, 5, 6, 7, up to the height of the screen. Simple.

The second line, another loop, for x that goes from 0 to the width of the screen, and I do this for each row. So for the first row, all the pixels of the row. 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15.

Okay, I'll explain the rest in words. In simple terms, for each pixel, I do this: I trace a ray and see what is in front of me. If I hit something, that's an intersection, and I have to calculate the color. The color depends on the material I hit and on all the lights in the scene.
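The two loops and the per-pixel work can be sketched in Python as follows. To keep it self-contained, this sketch makes several assumptions of its own: a pinhole camera at the origin, a single sphere, a single distant light, simple Lambert shading, and an ASCII-art "screen" instead of real pixels.

```python
import math

WIDTH, HEIGHT = 32, 16  # a tiny "screen"; terminal characters are about twice as tall as wide
SPHERE_CENTER, SPHERE_RADIUS = (0.0, 0.0, -2.0), 1.0
LIGHT_DIR = (-1.0, 1.0, 1.0)  # direction towards a distant light: up, left, towards the camera
SHADES = ".:-=+*#%@"          # from dark to bright

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)

def hit_sphere(origin, direction):
    """Distance to the nearest intersection in front of the origin, or None."""
    oc = tuple(o - c for o, c in zip(origin, SPHERE_CENTER))
    b = dot(oc, direction)  # direction is unit length
    disc = b * b - (dot(oc, oc) - SPHERE_RADIUS ** 2)
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0.0 else None

def render():
    light = normalize(LIGHT_DIR)
    rows = []
    for y in range(HEIGHT):     # one row at a time
        row = ""
        for x in range(WIDTH):  # every pixel of the row
            # Primary ray from the camera at the origin through the pixel centre.
            u = (x + 0.5) / WIDTH * 2.0 - 1.0
            v = 1.0 - (y + 0.5) / HEIGHT * 2.0
            direction = normalize((u, v, -1.0))
            t = hit_sphere((0.0, 0.0, 0.0), direction)
            if t is None:
                row += " "      # nothing in front of me: background
            else:
                hit = tuple(t * d for d in direction)
                normal = normalize(tuple(h - c for h, c in zip(hit, SPHERE_CENTER)))
                brightness = max(0.0, dot(normal, light))  # material response to the light
                row += SHADES[min(len(SHADES) - 1, int(brightness * len(SHADES)))]
        rows.append(row)
    return rows

print("\n".join(render()))
```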

This is an example of the result. Up close, you can't understand anything, but from a distance you can see that it's a Cornell box. There's a red wall on the left, a green wall on the right, and there are two objects in the scene.

This algorithm is computationally expensive because, for each ray, I have to check whether it intersects an object in the scene, and I have to check all the objects to find the closest one. So if the complexity of the scene increases, the time I spend on all these checks also increases. It also requires a lot of memory, because the entire scene has to be available in memory: at each bounce, I have to check again against all the objects in my scene. A scene can weigh several MBs, if not GBs, and here we are in 1986, when memory wasn't what it is today.
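The brute-force closest-hit search looks like this in a minimal Python sketch (spheres only, in a randomly generated hypothetical scene): every object must be tested for every ray, so the work grows linearly with the number of objects.

```python
import math
import random
from typing import Optional

Vec = tuple[float, float, float]

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def hit_sphere(origin: Vec, direction: Vec, center: Vec, radius: float) -> Optional[float]:
    """Distance along a unit-length ray to the sphere, or None if it misses."""
    oc = (origin[0] - center[0], origin[1] - center[1], origin[2] - center[2])
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0.0 else None

def closest_hit(origin: Vec, direction: Vec, spheres: list[tuple[Vec, float]]):
    """Brute force: test the ray against every object and keep the nearest hit."""
    nearest = None  # (distance, index of the object)
    tests = 0
    for index, (center, radius) in enumerate(spheres):
        tests += 1
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, index)
    return nearest, tests

rng = random.Random(0)
for count in (10, 1_000, 100_000):
    # Hypothetical scene: `count` small spheres scattered in front of the camera.
    spheres = [((rng.uniform(-5, 5), rng.uniform(-5, 5), rng.uniform(-20, -5)), 0.2)
               for _ in range(count)]
    _, tests = closest_hit((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), spheres)
    print(f"{count:>7,} objects -> {tests:>7,} intersection tests for one ray")
```

At 1920x1080, a scene with 1,000 objects already means about two billion brute-force intersection tests per frame for the first ray of each pixel alone, before any bounce.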

Do I have a reasonable alternative? Maybe an algorithm that allows me to focus on one geometry at a time? Yes, I do! It's called rasterization.

So, what it actually does is focus on one geometry at a time. It loads the geometry into the graphics card, performs the necessary calculations, checks if each pixel is within the geometry, and calculates the color.

Rasterization Algorithm

Then, it unloads the geometry and loads another one, so I can focus on just one small triangle at a time, which is only a few bytes. Computationally, it's manageable: as I've already mentioned, it checks whether a point is inside a geometry, and it has low memory usage because it focuses on one geometry at a time.
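The "is this pixel inside the triangle?" test is commonly done with edge functions: for each edge, a 2D cross product tells which side of the edge the pixel centre lies on. A minimal Python sketch for one triangle already projected onto the screen (the projection step and the color calculation are left out):

```python
Point = tuple[float, float]

def edge(a: Point, b: Point, p: Point) -> float:
    """Edge function: > 0 if p is left of the edge a->b, < 0 if right, 0 if on it."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(a: Point, b: Point, c: Point, width: int, height: int) -> list[tuple[int, int]]:
    """Pixels (x, y) whose centres fall inside triangle abc, clipped to the screen."""
    xs, ys = (a[0], b[0], c[0]), (a[1], b[1], c[1])
    # Only pixels inside the triangle's bounding box can be covered.
    x0, x1 = max(0, int(min(xs))), min(width - 1, int(max(xs)))
    y0, y1 = max(0, int(min(ys))), min(height - 1, int(max(ys)))
    covered = []
    for y in range(y0, y1 + 1):
        for x in range(x0, x1 + 1):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            w0, w1, w2 = edge(b, c, p), edge(c, a, p), edge(a, b, p)
            # Inside if p is on the same side of all three edges (works for either winding).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                covered.append((x, y))
    return covered

pixels = set(rasterize((0.0, 0.0), (8.0, 0.0), (0.0, 8.0), width=12, height=8))
for y in range(8):
    print("".join("#" if (x, y) in pixels else "." for x in range(12)))
```

Real GPUs also apply a fill rule so that pixels lying exactly on an edge shared by two triangles are drawn only once; this sketch simply counts them as inside.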

However, the rasterization technique loses some of the phenomena that light gives us, and the trade-offs are these: I lose reflections, I lose refraction, I lose indirect light because I don't do the bounces, I lose shadows, and I also lose this beautiful phenomenon called caustics.

Caustics are those spots where light gets concentrated because a curved surface, in this case the glass, focuses it.

I can achieve all these effects with rasterization, but I have to use tricks and approximations, and they are not as faithful as ray tracing, because ray tracing actually simulates the behavior of light and is very precise, giving us all these effects for free.

We are approaching the conclusion, because a few decades later, after the 80s, the 90s, and the 2000s, we reach 2018, when the first consumer graphics cards that accelerate ray tracing in hardware come to market, and around 2020, along with the pandemic, the technology spreads to game consoles and more graphics cards. What these beautiful, powerful graphics cards do is calculate the intersections between rays and geometries for us.

Hardware Accelerated Raytracing

They are incredibly fast, orders of magnitude faster at this job than the normal processor in our computers. So today, even on the latest generation of smartphones, we can do amazing things.

Let me make this cameo: 'What a Time To Be Alive'!